Have you ever wondered how machine translation works? With modern deep learning libraries, a fairly small amount of code is enough to build a basic tool in the spirit of Google Translate or DeepL. In this article, we will walk you through the process of creating a sequence-to-sequence (seq2seq) machine translation model. By the end, you'll understand how it works and how to turn it into a deployable translation model.

Understanding the Seq2Seq Model

To grasp the concept of the seq2seq model, let's dive into an example. Imagine you have a sentence in English:

"How are you?"

and you want to translate it into Tamazight:

"Amek tettiliḍ?"

The seq2seq model consists of an encoder and a decoder, which work together to perform this translation.

- Encoder: The encoder takes the source sentence, "How are you?", and processes it word by word. It encodes the information into a fixed-length vector called the context vector. In our example, the encoder would analyze each word and create a meaningful representation of the sentence.

- Decoder: The decoder receives the context vector from the encoder and starts generating the target sentence, "Amek tettiliḍ?". It does this word by word, taking into account the context vector and the previously generated words. The decoder learns to generate the correct translation based on the patterns it discovers during training.
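To make the flow of data between the two components concrete, here is a minimal sketch in plain Python. It assumes a simple tanh recurrent cell, a toy vocabulary of six IDs shared by both languages, and random untrained weights; all names (`encode`, `decode`, `params`, `HIDDEN_SIZE`) are illustrative choices, not part of any library. A real model would learn its weights and would usually be built with a framework such as TensorFlow.

```python
import math
import random

HIDDEN_SIZE = 4   # length of the context vector (arbitrary for this sketch)
VOCAB_SIZE = 6    # toy vocabulary shared by both languages (an assumption)
SOS_ID, EOS_ID = 0, 1


def random_matrix(rows, cols, rng):
    """Untrained random weights; training would replace these with learned values."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_step(w_input, w_hidden, word_vector, hidden):
    """One recurrent update: tanh(W_input @ x + W_hidden @ h)."""
    from_input = matvec(w_input, word_vector)
    from_hidden = matvec(w_hidden, hidden)
    return [math.tanh(a + b) for a, b in zip(from_input, from_hidden)]


def encode(token_ids, params):
    """Read the source one token at a time; the final hidden state is the context vector."""
    hidden = [0.0] * HIDDEN_SIZE
    for token_id in token_ids:
        hidden = rnn_step(params["enc_in"], params["enc_hid"],
                          params["embed"][token_id], hidden)
    return hidden


def decode(context, params, max_len=10):
    """Greedy decoding: start from the context vector and feed each prediction back in."""
    hidden, token_id, output = context, SOS_ID, []
    for _ in range(max_len):
        hidden = rnn_step(params["dec_in"], params["dec_hid"],
                          params["embed"][token_id], hidden)
        scores = matvec(params["out"], hidden)   # one score per vocabulary entry
        token_id = scores.index(max(scores))     # keep the highest-scoring word
        if token_id == EOS_ID:
            break
        output.append(token_id)
    return output


rng = random.Random(0)
params = {
    "embed": random_matrix(VOCAB_SIZE, HIDDEN_SIZE, rng),
    "enc_in": random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng),
    "enc_hid": random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng),
    "dec_in": random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng),
    "dec_hid": random_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng),
    "out": random_matrix(VOCAB_SIZE, HIDDEN_SIZE, rng),
}

short_context = encode([2, 3], params)
long_context = encode([2, 3, 4, 5, 2, 3], params)
generated = decode(short_context, params)

print(len(short_context), len(long_context))  # both equal HIDDEN_SIZE
print(generated)  # arbitrary IDs, because the weights are untrained
```

Whatever the length of the source sentence, the encoder returns a vector of the same size, and the decoder stops either at the end-of-sequence ID or after `max_len` steps.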

Data Preparation

Now that we have an understanding of the seq2seq model, let's talk about data preparation using the same example.

To train a machine translation model, we need a parallel corpus: a collection of aligned sentence pairs in the source language (English in our case) and the target language (Tamazight). Resources such as Europarl and the UN Parallel Corpus provide large amounts of multilingual data.

- Tokenization: The first step in data preparation is tokenization. We break the English and Tamazight sentences down into individual tokens (words and punctuation marks). For example, the English sentence "How are you?" would be tokenized into ['How', 'are', 'you', '?'], and the Tamazight sentence "Amek tettiliḍ?" would be tokenized into ['SOS', 'Amek', 'tettiliḍ', '?', 'EOS']. We add SOS and EOS to the target sentence to mark the start and end of the sequence.

- Cleaning and Normalization: Next, we perform cleaning and normalization on the tokenized sentences. This involves lowercasing the words and removing any unnecessary characters, punctuation, or special symbols that might hinder the translation process. For example, we might remove the question mark at the end of both the English and Tamazight sentences to simplify the training data.

Depending on the characteristics of the source and target languages, additional language-specific preprocessing steps may be required. For example, in French, we might need to handle special characters like accents or diacritics.

- Vocabulary Creation: We create a vocabulary by collecting the unique words from the source and target sentences. Each word is then assigned a unique integer index, resulting in a word index map (a lookup table between words and IDs), which will be used during the training process:

6: "how"

330: "are"

537: "you"

With that, our tokenized example would look like this (the SOS and EOS markers are left out here for brevity):

[6, 330, 537] # How Are You

[420, 775] # Amek tettiliḍ

- Sequence Padding: To ensure uniform sequence lengths, we pad the sentences with a special token (e.g., "PAD", or 0) so that they all have the same length. Padding is necessary because the sentences in a training batch must share one shape before they can be fed to the network. By adding padding tokens at the end of shorter sentences, we create equal-length sequences, allowing efficient training. The padded length is usually the number of tokens in the longest sentence.

For example, padding our input and output tokens to a length of 13 gives the following result:

[6, 330, 537, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] # How Are You

[420, 775, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] # Amek tettiliḍ
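The four preparation steps above can be sketched in a few lines of plain Python. This is only an illustration under some assumptions: the regular expression used for tokenization, the use of separate vocabularies for each language, and the helper names (`tokenize`, `clean`, `build_vocab`, `to_ids`, `pad`) are all choices made for this example. Because the vocabulary here is built from a single sentence pair, the IDs differ from the ones shown earlier, which would come from a full corpus.

```python
import re
import unicodedata

PAD, SOS, EOS = "PAD", "SOS", "EOS"


def tokenize(sentence):
    """Split a sentence into word tokens and standalone punctuation marks."""
    # NFC keeps letters with diacritics (such as "ḍ") as single characters.
    normalized = unicodedata.normalize("NFC", sentence)
    return re.findall(r"\w+|[^\w\s]", normalized)


def clean(tokens):
    """Lowercase the tokens and drop those without letters or digits (e.g. '?')."""
    return [tok.lower() for tok in tokens if any(ch.isalnum() for ch in tok)]


def build_vocab(sentences, specials=(PAD, SOS, EOS)):
    """Assign an integer ID to every unique token; PAD gets ID 0."""
    vocab = {tok: i for i, tok in enumerate(specials)}
    for tokens in sentences:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def to_ids(tokens, vocab):
    """Replace each token with its vocabulary ID."""
    return [vocab[tok] for tok in tokens]


def pad(ids, length, pad_id=0):
    """Append pad_id until the sequence reaches `length` (longer inputs are returned unchanged)."""
    return ids + [pad_id] * (length - len(ids))


source = clean(tokenize("How are you?"))
target = [SOS] + clean(tokenize("Amek tettiliḍ?")) + [EOS]

source_vocab = build_vocab([source])
target_vocab = build_vocab([target])

source_ids = pad(to_ids(source, source_vocab), 13)
target_ids = pad(to_ids(target, target_vocab), 13)

print(source)      # ['how', 'are', 'you']
print(source_ids)  # [3, 4, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
print(target_ids)  # [1, 3, 4, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```

Reserving ID 0 for the padding token keeps padded positions easy to recognize later, for example when computing the loss.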

Model Training

With the data prepared, we can proceed with training our machine translation model. We split the data into training and validation sets. The training set is used to update the model's parameters during training, while the validation set helps us monitor the model's performance and prevent overfitting.
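As a minimal sketch of the split, the helper below shuffles the sentence pairs and holds out a fraction of them for validation. The function name, the 20% validation fraction, and the fixed seed are assumptions made for this example, and the sentence pairs are placeholders.

```python
import random


def train_validation_split(pairs, validation_fraction=0.2, seed=42):
    """Shuffle the sentence pairs and hold out a fraction for validation."""
    shuffled = list(pairs)                 # copy, so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


# Placeholder data standing in for a real parallel corpus.
pairs = [(f"source sentence {i}", f"target sentence {i}") for i in range(10)]
train_pairs, validation_pairs = train_validation_split(pairs)

print(len(train_pairs), len(validation_pairs))  # 8 2
```

Shuffling before splitting matters when the corpus is ordered, for instance by document or topic, because otherwise the validation set may not resemble the training data.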

Neural Network Training

During training, we give the model the source sentences (English) as input and the corresponding target sentences (Tamazight) as the desired output. The model generates predictions for the target sentences, word by word, based on the input sequences. These predictions are compared to the actual target sequences using a loss function, such as categorical cross-entropy.
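To illustrate the loss, here is a small sketch of categorical cross-entropy for one target sentence. At every position the model outputs one score per vocabulary word; the loss is the negative log-probability of the correct word, averaged over positions. Skipping padded positions (ID 0) is a common practice that we assume here, and the scores are made-up numbers for a four-word vocabulary.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sequence_cross_entropy(step_scores, target_ids, pad_id=0):
    """Average of -log p(correct word) over the non-padding positions."""
    losses = []
    for scores, target_id in zip(step_scores, target_ids):
        if target_id == pad_id:
            continue  # padded positions carry no information
        probabilities = softmax(scores)
        losses.append(-math.log(probabilities[target_id]))
    return sum(losses) / len(losses) if losses else 0.0


target_ids = [1, 2, 0]  # two real words followed by one padding position

# A model that has no idea: every word gets the same score.
uniform_scores = [[0.0, 0.0, 0.0, 0.0] for _ in target_ids]
# A model that strongly favors the correct word at each real position.
confident_scores = [[0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0], [0.0, 0.0, 0.0, 0.0]]

uniform_loss = sequence_cross_entropy(uniform_scores, target_ids)
confident_loss = sequence_cross_entropy(confident_scores, target_ids)
print(uniform_loss, confident_loss)
```

The uniform model's loss equals log(4), the cost of guessing among four words, while the confident model's loss is close to zero.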

Backpropagation and Parameter Updates

Through the process of backpropagation, the model calculates the gradients of the loss with respect to its parameters. These gradients indicate the direction and magnitude of parameter updates needed to minimize the loss. The optimization algorithm, such as stochastic gradient descent (SGD) or Adam, uses these gradients to update the model's parameters iteratively, making the predictions more accurate over time.

Iterative Training

The training process occurs iteratively over multiple epochs. In each epoch, the model goes through the entire training dataset, updating its parameters and refining its grasp of the translation patterns. By repeating this process, the model becomes increasingly proficient at generating accurate translations.
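The loop below sketches how gradients, parameter updates, and epochs fit together. To stay short, it swaps the seq2seq network for a much simpler stand-in: a table of scores that maps each source word ID directly to a target word ID, trained with plain SGD. For a softmax output with cross-entropy loss, the gradient with respect to the scores is the predicted probabilities minus the one-hot target; in a deep network, backpropagation would carry this gradient further back through every layer. The toy word pairs, learning rate, and epoch count are assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(pairs, n_source, n_target, epochs=50, learning_rate=0.5):
    """Train a score table with SGD and return it with the loss of each epoch."""
    weights = [[0.0] * n_target for _ in range(n_source)]
    history = []
    for _ in range(epochs):                      # one epoch = one pass over the data
        epoch_loss = 0.0
        for source_id, target_id in pairs:
            probabilities = softmax(weights[source_id])
            epoch_loss += -math.log(probabilities[target_id])
            for k in range(n_target):
                # Gradient of the cross-entropy loss with respect to score k.
                gradient = probabilities[k] - (1.0 if k == target_id else 0.0)
                # SGD step: move against the gradient.
                weights[source_id][k] -= learning_rate * gradient
        history.append(epoch_loss / len(pairs))
    return weights, history


# Toy data: source word 0 maps to target word 1, 1 to 2, and 2 to 0.
pairs = [(0, 1), (1, 2), (2, 0)]
weights, history = train(pairs, n_source=3, n_target=3)

print(history[0], history[-1])  # the average loss shrinks over the epochs
```

Recording the average loss per epoch, as `history` does here, is also what makes it possible to plot training progress.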

Validation and Evaluation

Throughout training, we periodically evaluate the model's performance on the validation set. This evaluation helps us monitor the model's progress and make adjustments if necessary. We can use metrics like BLEU (Bilingual Evaluation Understudy), which scores a translation by how many of its n-grams also appear in the reference translation.
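To give an intuition for BLEU, here is a simplified sentence-level sketch. It combines clipped unigram and bigram precision with a brevity penalty for translations that are too short. Standard BLEU uses n-grams up to length 4 and is computed over a whole corpus, so for real evaluations an established implementation is preferable; the function name `simple_bleu` and the English example tokens are made up for this illustration.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=2):
    """Geometric mean of clipped n-gram precisions times a brevity penalty."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        candidate_counts = ngrams(candidate, n)
        reference_counts = ngrams(reference, n)
        # Clipping: an n-gram is credited at most as often as it occurs in the reference.
        overlap = sum(min(count, reference_counts[gram])
                      for gram, count in candidate_counts.items())
        total = sum(candidate_counts.values())
        if overlap == 0 or total == 0:
            return 0.0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: translations shorter than the reference are scaled down.
    if len(candidate) >= len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(reference) / len(candidate))
    return brevity * math.exp(sum(log_precisions) / max_n)


reference = ["the", "cat", "sat", "down"]

exact = simple_bleu(["the", "cat", "sat", "down"], reference)
too_short = simple_bleu(["the", "cat", "sat"], reference)
repetitive = simple_bleu(["the", "cat", "the", "cat"], reference)
unrelated = simple_bleu(["a", "dog", "ran", "off"], reference)

print(exact, too_short, repetitive, unrelated)
```

An exact match scores 1.0, the shortened translation is penalized only for its length, the repetitive one loses credit through clipping, and the unrelated one scores 0.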

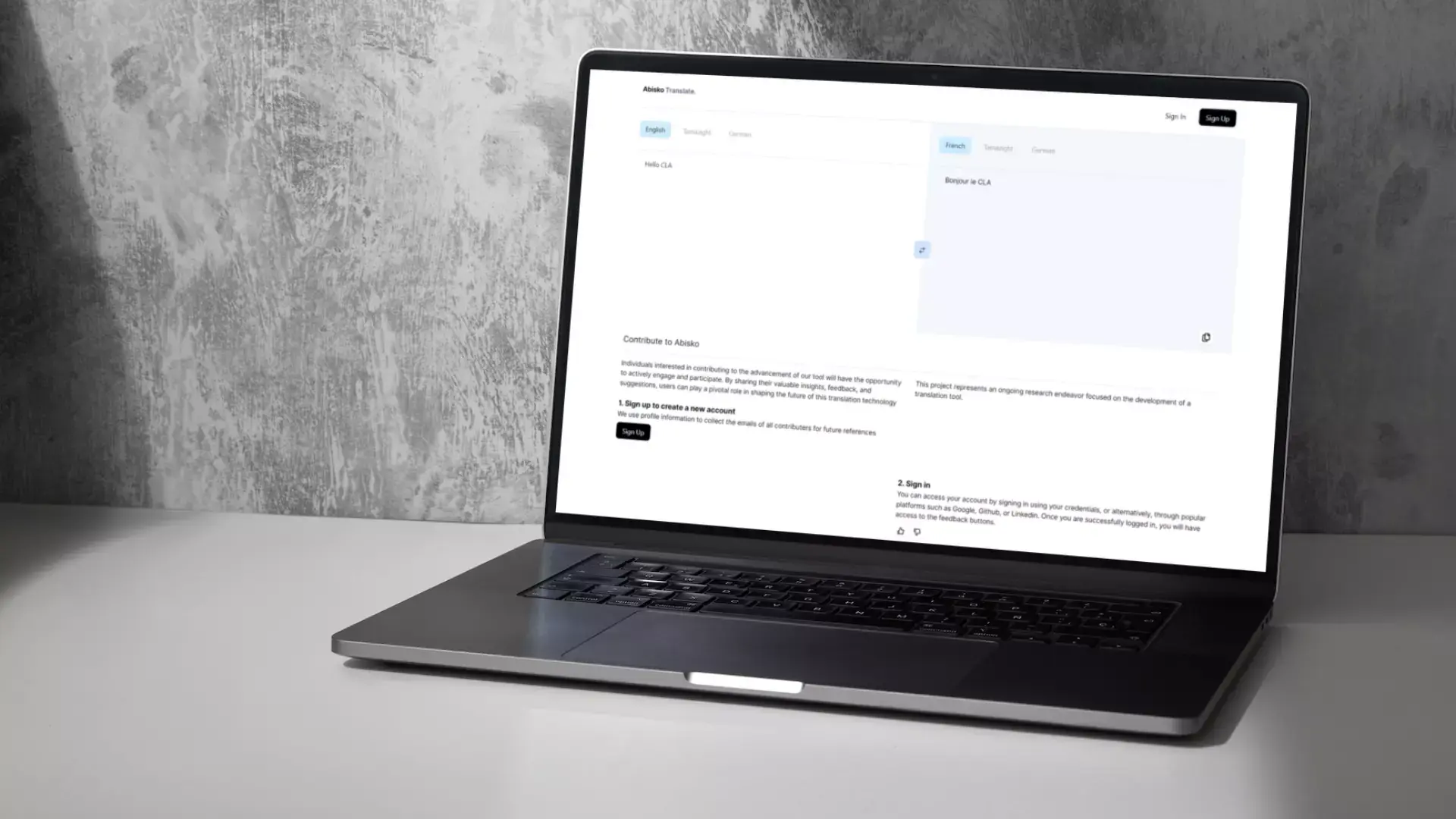

Deployment

Once the model is trained and evaluated, it is ready for deployment. TensorFlow provides several options for deploying machine translation models, including TensorFlow Serving, TensorFlow Lite, and TensorFlow.js. TensorFlow Serving allows serving the model through a REST API, enabling easy integration with other applications. TensorFlow Lite enables running the model on mobile devices with limited resources. TensorFlow.js enables deployment in web browsers, making the translation accessible to users directly on websites.

A web framework such as FastAPI can also be used to build a REST API.
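As a rough sketch of what such an API does, the example below uses Python's built-in `http.server` module as a dependency-free stand-in for FastAPI or TensorFlow Serving. Everything specific here is an assumption for illustration: the `/translate` path, the `text` field of the JSON payload, and the `translate` function, which is a one-entry lookup table where a real service would call the trained model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for the trained model: a one-entry lookup table.
DEMO_TRANSLATIONS = {"how are you?": "Amek tettiliḍ?"}


def translate(text):
    """Return a translation, or None if this demo has no answer."""
    return DEMO_TRANSLATIONS.get(text.strip().lower())


def handle_translate(body):
    """Turn a raw JSON request body into (status_code, response_dict)."""
    try:
        text = json.loads(body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'text' field"}
    if not isinstance(text, str):
        return 400, {"error": "'text' must be a string"}
    translation = translate(text)
    if translation is None:
        return 404, {"error": "no translation available"}
    return 200, {"translation": translation}


class TranslateHandler(BaseHTTPRequestHandler):
    """Answers POST /translate with a JSON response."""

    def do_POST(self):
        if self.path != "/translate":
            status, response = 404, {"error": "unknown path"}
        else:
            length = int(self.headers.get("Content-Length", 0))
            status, response = handle_translate(self.rfile.read(length))
        data = json.dumps(response, ensure_ascii=False).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host="127.0.0.1", port=8000):
    """Start the server; this call blocks until the process is stopped."""
    HTTPServer((host, port), TranslateHandler).serve_forever()
```

Calling `serve()` starts the server locally, after which a POST request to `/translate` with the body `{"text": "How are you?"}` returns the translation as JSON. Keeping the request logic in `handle_translate`, separate from the HTTP handler, makes it easy to test without starting a server and to reuse when moving to a framework.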

You can also check our article on how to deploy your machine learning model for more details.

Continuous Improvement

Building a machine translation model is an iterative process. Monitoring user feedback, collecting additional data, and refining the model through regular updates are essential for continuous improvement. TensorFlow's flexibility and scalability make it easier to adapt the model to evolving requirements and new languages.
